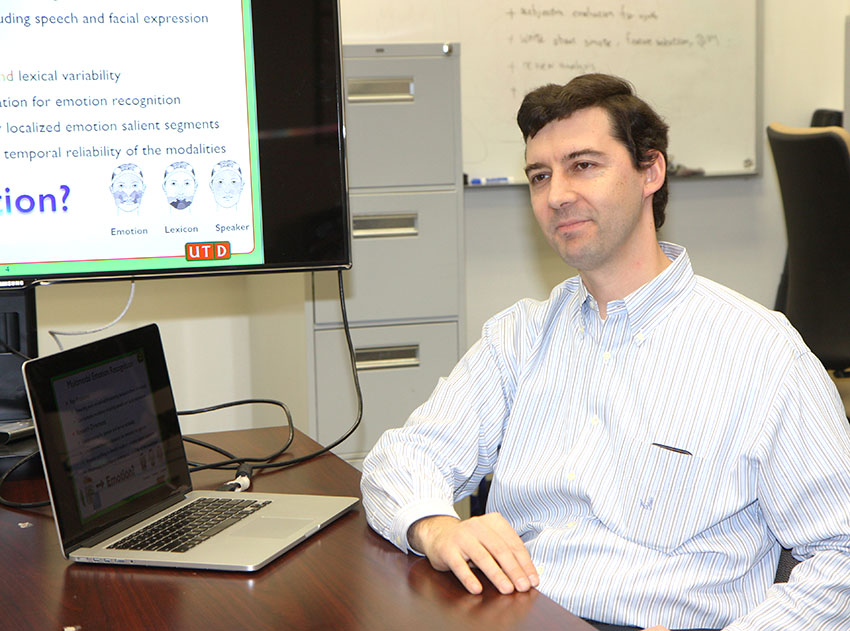

Dr. Carlos Busso's work analyzed the limitations of detecting emotions from speech or facial expressions alone, and presented the benefits of using both modalities at the same time.

Dr. Carlos Busso, assistant professor of electrical engineering in the Erik Jonsson School of Engineering and Computer Science, is the inaugural recipient of a 10-Year Technical Impact Award given by the Association for Computing Machinery International Conference on Multimodal Interaction.

The award was given for Busso’s work on one of the first studies of audiovisual emotion recognition. The work analyzed the limitations of detecting emotions from speech or facial expressions alone, and discussed the benefits of using both modalities at the same time.

“We were not just combining the modalities to get better performance,” Busso said. “We looked at why you get better performance.”

The paper has been cited about 380 times, he said.

With a background in speech processing, Busso began his doctoral studies at the University of Southern California in 2004. He studied how other modalities can complement speech processing.

“I have always been interested in human communication,” Busso said. “Not only what we say, but how we say it — the subtle, nonverbal behaviors that we express to others make a big difference in what we are communicating.”

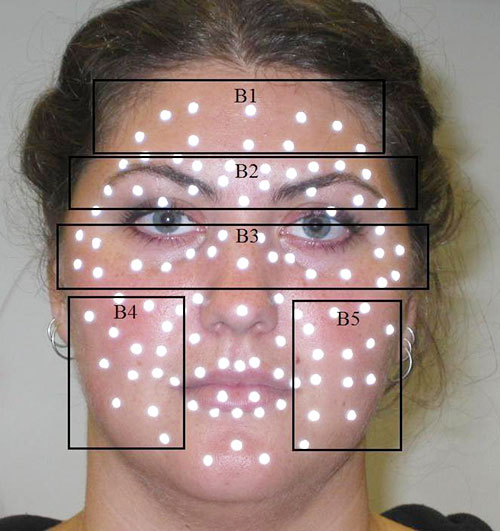

The five areas of the actress’s face studied for facial recognition of emotions.

Take the emotions of happiness and anger. With speech processing alone, they can be easily confused — both emotions are characterized by behaviors such as shorter inter-word silence, higher pitch and dramatic increases of energy.

“We have a lot of trouble distinguishing between the two if you only listen to audio, but when you see a smile on the face, that makes the whole difference,” Busso said. “In our study, we demonstrated which emotions are likely to be confused using only one modality, and we fused them together and found that you get more accurate emotion classification.”

In the study, funded by the National Science Foundation and the U.S. Army, an actress read 258 sentences expressing four emotions: happiness, sadness, anger and a neutral state. The researchers recorded audio with a microphone, extracting features related to fundamental frequency and intensity, and used a motion capture system to record facial motion data, capturing the subject’s detailed facial muscle activity as she expressed each emotion. The emotions were analyzed using audio and facial expressions separately, and then using a fusion of the two modalities.

Among the study’s more interesting findings were that the cheek area gives valuable information for emotion classification, and that the eyebrows, which were widely used in facial expression recognition, give lower classification performance.

The researchers noted that the best fusion technique depended on the application, and that the modalities can also provide complementary information when either the audio or the facial expression is limited, such as when someone has a beard or mustache or is wearing glasses, or when the sound signal is not reliable. Also, a pair of emotions that is confused in only one modality can be resolved using audiovisual fusion.
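The idea that fusion can resolve a pair of emotions confused in one modality can be sketched in a few lines of Python. This is only an illustration, not the method used in the study: the article does not say which fusion techniques were tested, so the weighted average of per-class scores below (one simple form of decision-level fusion), the function names and the score values are all assumptions made up for the example.

```python
# Illustrative sketch only: the fusion rule and all numbers are hypothetical,
# not taken from the study described in the article.

EMOTIONS = ("happiness", "sadness", "anger", "neutral")


def fuse_scores(audio, face, audio_weight=0.5):
    """Combine per-emotion scores from two modalities by weighted average."""
    return {
        emotion: audio_weight * audio[emotion]
        + (1.0 - audio_weight) * face[emotion]
        for emotion in EMOTIONS
    }


def classify(scores):
    """Return the emotion with the highest score."""
    return max(scores, key=scores.get)


# Made-up scores for one happy utterance. The audio classifier barely
# separates happiness from anger; the facial classifier sees a smile.
audio_scores = {"happiness": 0.40, "sadness": 0.05, "anger": 0.45, "neutral": 0.10}
face_scores = {"happiness": 0.70, "sadness": 0.05, "anger": 0.10, "neutral": 0.15}

fused_scores = fuse_scores(audio_scores, face_scores)

print("audio only:", classify(audio_scores))
print("face only: ", classify(face_scores))
print("fused:     ", classify(fused_scores))
```

With these made-up scores, the audio-only decision is anger, while the fused decision is happiness. Changing `audio_weight` shifts trust toward one modality, which is one way to picture why the best fusion choice could depend on the application, for example when the sound signal is unreliable.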

About 10 years ago, human-computer interaction studies were limited to either speech or facial recognition, and applications were mostly limited to telemarketing, such as determining whether customers were satisfied with call agents, Busso said.

Today, applications for human-computer interaction are on the rise and interest in the field is growing, as evidenced in recent years by the establishment of the Institute of Electrical and Electronics Engineers’ Transactions on Affective Computing, which is solely dedicated to understanding emotions from a computational perspective; a biannual conference on affective computing; and more sessions on emotions at speech processing conferences.

Future applications of the work could include medicine, where practitioners could use human-computer interaction with patients suffering from depression or Parkinson’s disease to better assess whether therapies are helping improve mood and certain behaviors; entertainment, to track the reactions of video game players; and security and defense, to highlight potential threats in situations where threatening behaviors are displayed or people are highly emotional.

Busso became the inaugural recipient of the award in late 2014. The paper was chosen from among all of the 10-year-old papers submitted to the International Conference on Multimodal Interaction (ICMI).

“It wasn’t until a couple of years ago that a co-author mentioned to me that our paper was ICMI’s most-cited at that time,” Busso said. “It is nice to be recognized, but I am more proud of the influence the paper has had in the area over 10 years, and is still having today.”